Simplifying Risk Adjustment

By Syed Muzayan Mehmud

Health Watch, May 2025

Health care risk adjustment methodologies have become increasingly complex, largely due to the belief that complexity enhances accuracy. However, a disconnect exists between how health care risk is assessed and how payments are adjusted.

This article explores the stark difference between measuring the accuracy of a risk assessment model at a member level and that of a risk adjustment mechanism at the health plan level. It shows that a highly simplified approach that does not use diagnosis codes can have similar accuracy on risk adjusted payments to models that do use codes. These findings suggest that accuracy is not a barrier to developing simpler risk adjustment methodologies, which can improve payment accuracy and reduce the financial incentive for coding diagnoses.

The Disconnect

Accurate risk adjustment is crucial for ensuring health plans receive appropriate compensation for the risks they manage, discouraging risk selection and fostering market stability. Risk adjusted payments are critical to health plan financials and represent a significant portion of the premiums plans collect.[1]

While model accuracy is not the only criterion in the development of a risk adjustment methodology, it is an important one that has guided the evolution of these models toward more complexity. We have added more condition categories, hierarchies within conditions, diagnosis codes (Dx), National Drug Codes (NDCs), different sets of model coefficients for population subgroups, condition interactions and so on.

However, more complexity comes at a significant cost. There is the cost to administer the program and a cost borne by health plans and providers with limited capacity to analyze and keep up with an elaborate method. There is also a substantial cost to society in the form of potentially large overpayments due to differential diagnosis coding; large health plans’ investments in finding more codes are passed on to consumers as higher premiums. In the Medicare Advantage (MA) program alone, overpayments due to overcoding are estimated to cost taxpayers billions of dollars.[2]

Has a perceived increase in the accuracy of risk adjusted payments been worth the complexity and added costs to society?

Health care risk adjustment accuracy is typically measured using member-level measures such as R-square (R2) or predictive ratios for subgroups such as demographic cohorts or disease conditions.[3]

However, there is a significant disconnect in how we measure accuracy and how risk adjustment really works. We don’t transfer payments between members of health plans or cut checks to demographic or specific disease cohorts. Risk adjustment takes place at the health plan level. These plans typically have thousands of members, with a varying mix of demographics, disease conditions and so on. The measurement of payment accuracy should not rely on member-level metrics or predictive ratios for selected subgroups, but rather on calculating the difference between predicted risk for a health plan and the actual risk incurred.

A Better Way

Once we look at accuracy in terms of how entities get risk adjusted instead of how members are risk scored, we find that far simpler models approach the accuracy of more complex ones.

Before seeing how this can work with real data, let’s spend a little time thinking through how this can ever work. It may seem counterintuitive that a less accurate model at the member level can perform just as well at the health plan level, where risk adjustment payments are actually made.

The key to understanding this is that error dynamics are different when we are thinking of total variability within a population vs. the variability within nonrandom groups of members. If risk adjustment models were perfect (i.e., R2 of 1.0), then their predictions would be perfect regardless of whether we measure them at the member or group level. But these models are not perfect. The best ones have large magnitudes of errors, with R2 well below 0.5. This leaves the door open to unexpected results when we roll up predictions and associated errors from a member to a group level.

A simple example is worth a few paragraphs. Let’s consider the following illustration, which also foreshadows how we will analyze real data.

Table 1 shows data on five hypothetical individuals. Their annual expenditure is in the Actual column. These expenditures are not in dollar terms but are scaled so they average to 1.0 over the population. There are predictions for the risk of these members from two different models, Prediction 1 (P1) and Prediction 2 (P2). How do we tell which model predicts expenditures better?

Table 1

Member-Level Data

We can calculate measures such as the coefficient of determination (R2) and mean absolute error (MAE). In this example, P1 has an R2 of 70% and P2 has an R2 of a distant 26% by comparison. Further, the MAE for P1 is significantly better (22%) relative to P2 (29%). MAE is a better measure of risk adjustment payment accuracy because it directly captures the size of the difference between what was expected and what happened. By either measure, P1 is clearly the better model.

Or is it? Two of our hypothetical individuals belong to plan 1 and three to plan 2. This is indicated in the Plan column. Recall that we don’t make risk adjustment payments to individuals; we make them to health plans. We need to roll up these data at the plan level[4] and recalculate our performance metrics. Once we do this, we get the results in Table 2, and the tables have turned! P1 has an R2 of 66% on a group basis, whereas P2 has a staggering R2 of over 99%! Further, the MAE for P1 is now far higher, at 21%, than the MAE for P2, at just 2%.

Table 2

Plan-Level Data

In this illustration, it would be a mistake to choose P1 over P2 as the better model. On the metric that ultimately matters—payment accuracy to risk adjusted entities—there is no contest. We risk making a similar mistake within large-scale health care risk adjustment programs if we don’t align the measurement of risk adjustment accuracy with how these payments are made.
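The roll-up described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the calculation behind Tables 1 and 2: the five data points are invented for this sketch (they are not the article's values), the models are named Model A and Model B to avoid confusion with P1 and P2, R2 is assumed to be computed as 1 - SSE/SST, and the plan-level rescaling is assumed to be a simple unweighted rescaling so that plans average 1.0.

```python
from collections import defaultdict


def r_squared(actual, predicted):
    """Coefficient of determination, assumed here to be 1 - SSE/SST."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def mean_absolute_error(actual, predicted):
    """Average absolute gap between what was expected and what happened."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def roll_up(plans, values):
    """Average member values within each plan, then rescale so that the
    plan-level values average 1.0 (unweighted; an assumption)."""
    groups = defaultdict(list)
    for plan, value in zip(plans, values):
        groups[plan].append(value)
    plan_means = [sum(groups[p]) / len(groups[p]) for p in sorted(groups)]
    overall = sum(plan_means) / len(plan_means)
    return [m / overall for m in plan_means]


# Hypothetical data invented for this sketch (NOT the article's Table 1).
plans = [1, 1, 2, 2, 2]
actual = [0.2, 1.0, 0.6, 1.2, 2.0]    # averages 1.0 over the population
model_a = [0.4, 1.2, 0.4, 1.0, 2.0]   # small member errors, same sign within a plan
model_b = [0.7, 0.5, 1.4, 1.4, 1.0]   # large member errors that offset within a plan

# Member-level metrics
member_mae_a = mean_absolute_error(actual, model_a)
member_mae_b = mean_absolute_error(actual, model_b)
member_r2_a = r_squared(actual, model_a)
member_r2_b = r_squared(actual, model_b)

# Plan-level metrics after the roll-up
plan_actual = roll_up(plans, actual)
plan_mae_a = mean_absolute_error(plan_actual, roll_up(plans, model_a))
plan_mae_b = mean_absolute_error(plan_actual, roll_up(plans, model_b))
plan_r2_a = r_squared(plan_actual, roll_up(plans, model_a))
plan_r2_b = r_squared(plan_actual, roll_up(plans, model_b))

print("member MAE:", member_mae_a, member_mae_b)
print("plan MAE:  ", plan_mae_a, plan_mae_b)
```

In this invented data set, Model A wins on both member-level metrics, while Model B wins at the plan level because its member errors cancel within each plan. The ranking reverses for the same reason as in the article's illustration, although the magnitudes here carry no meaning.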

Testing with Data

Staying with the earlier illustration, let’s examine actual health care costs that are scaled to 1.0 across more than 90,000 real-world observations from the Agency for Healthcare Research & Quality’s (AHRQ’s) Medical Expenditure Panel Survey (MEPS). The data are for years 2017–2019, including households with Medicare, Medicaid and commercial coverage.

Representing the status quo method, there are risk scores from the Centers for Medicare & Medicaid Services (CMS) Hierarchical Condition Category (HCC) model, the same model that is used to adjust payments to plans in the Affordable Care Act (ACA) program. This model uses demographic information such as age and gender, along with diagnosis codes and prescription drug codes. Thousands of diagnosis codes and NDCs are organized into more than 150 HCC indicators, including hierarchies, condition interactions, pharmacy flags and so on.

For my alternative approach, we will use a simple method based on age, gender and just 12 health status survey questions. The questions are along the lines of what most of us experience when filling out paperwork in a doctor’s office. These include “Have you been diagnosed with cancer?”, “Do you have diabetes?”, and “Do you have difficulty walking up a flight of stairs?”

An approach that utilizes detailed diagnosis codes will be most accurate in a concurrent application.[5] I chose this comparison to give the alternative approach its stiffest test.

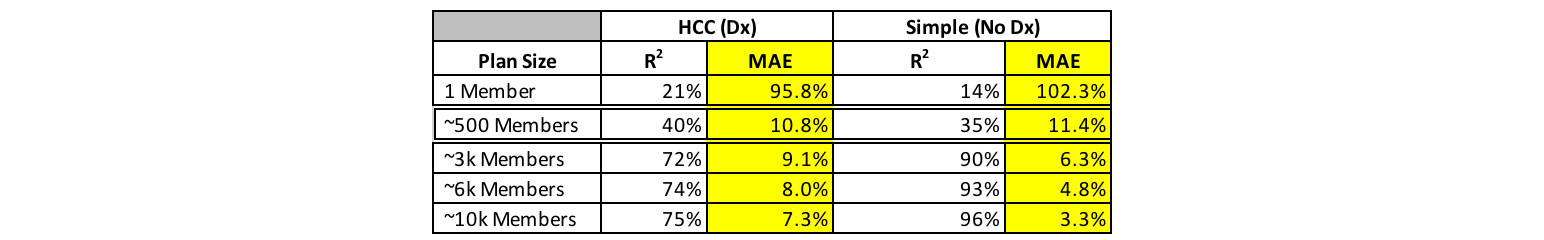

The member-level performance from the two models is presented in Table 3.

Table 3

Member-Level Comparison of HCC (Dx) and Simple (No Dx) Predictions

As expected, the highly complex, diagnosis-based HCC risk adjustment model performs well, with an R2 of more than 20%. The R2 of my simple model isn’t too far behind at 14%. Note that the performance of the diagnosis-based HCC model used in the Medicare Advantage program is around 11%.[6]

Next, we assemble hypothetical health plans from the data that look just like real health plan entities, with typical group sizes as well as a distribution of expenditures that is typical for real-world plans. Then, we recalculate our metrics as in the earlier illustration.
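As a rough sketch of this step, the Python below groups members into hypothetical plans of a given size and recomputes MAE at the plan level. It is a simplification under stated assumptions: members are assigned to plans at random, whereas the analysis here builds plans with realistic, nonrandom characteristics; the member data are synthetic rather than drawn from MEPS; and the function name `plan_level_mae` and all parameter values are hypothetical.

```python
import random


def plan_level_mae(actual, predicted, plan_size, rng):
    """Randomly assign members to plans of `plan_size`, average actual and
    predicted risk within each plan, rescale both to average 1.0 across
    plans, and return the mean absolute error across plans."""
    order = list(range(len(actual)))
    rng.shuffle(order)
    n_plans = len(order) // plan_size  # leftover members are dropped
    plan_actual, plan_pred = [], []
    for k in range(n_plans):
        members = order[k * plan_size:(k + 1) * plan_size]
        plan_actual.append(sum(actual[i] for i in members) / plan_size)
        plan_pred.append(sum(predicted[i] for i in members) / plan_size)
    scale_actual = sum(plan_actual) / n_plans
    scale_pred = sum(plan_pred) / n_plans
    errors = [abs(a / scale_actual - p / scale_pred)
              for a, p in zip(plan_actual, plan_pred)]
    return sum(errors) / n_plans


# Synthetic members (an assumption; not MEPS data): each member has an
# underlying risk, actual cost is that risk times random noise, and the
# model is assumed to predict the underlying risk exactly.
rng = random.Random(0)
underlying_risk = [rng.lognormvariate(0.0, 0.7) for _ in range(20000)]
actual_cost = [r * rng.lognormvariate(0.0, 0.8) for r in underlying_risk]
predicted_risk = underlying_risk

mae_by_size = {
    size: plan_level_mae(actual_cost, predicted_risk, size, rng)
    for size in (1, 100, 1000)
}
for size, mae in mae_by_size.items():
    print(f"plan size {size:>5}: MAE = {mae:.3f}")
```

With random grouping, plan-level error shrinks as plan size grows because member-level noise averages out. Reproducing the comparison in this article would additionally require realistic plan composition and the two actual sets of predictions.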

Table 4 shows the R2 and MAE results for the two very different approaches by plan size: the diagnosis (and NDC) code–based HCC model, and the ultra-simple model that relies only on demographics and a few questions about a member’s health status.

Table 4

Plan-Level Comparison of HCC (Dx) and Simple (No Dx) Predictions

We see the relatively lower performance of the simple model replicated for a plan size of 1 (which is equivalent to the prior table for individuals). As soon as we start measuring payment accuracy between groups of people, the performance gap between the diagnosis-based and the simple model closes. In fact, the simple model tends to perform better.

This is a striking result. A simplistic model relying on 12 questions about health status outperforms a model relying on more than 10,000 diagnosis codes and 10,000 NDCs. There are two reasons for this, both of which follow from a central point of this article. First, we need to measure model accuracy in a way that represents the groups of members that are risk adjusted. Second, and crucially, we need to build our models that way. We get significantly better payment accuracy when we fit our models to groups of individuals that are representative of health plans rather than to individual members.

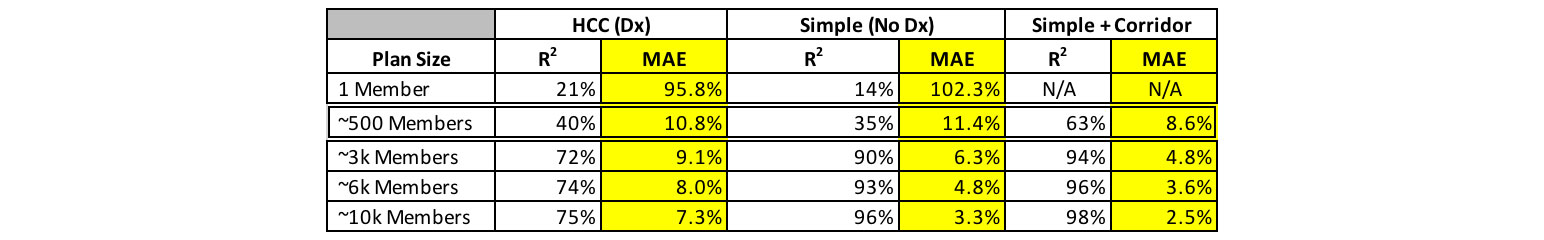

Note that for group sizes around 500 members, the more complex diagnosis code–based model performs slightly better. More than 99% of the members in MA or ACA are in plans with higher membership, but we still need to think about how to improve payment accuracy for tiny health plans, which are relatively few. One relatively simple way to accomplish this is through a two-sided risk corridor. In Table 5, we implement a 25% corridor, where a health plan is credited 25% of the difference between actual aggregate risk and that estimated by the model. Such an approach can be implemented below a very low threshold of plan size and is likely not necessary for larger plans that comprise almost the entire membership in the MA or ACA programs.

Table 5

Plan-Level Results Including the Simple Method with a Risk Corridor
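The two-sided corridor described above amounts to moving a plan's modeled risk 25% of the way toward its actual aggregate risk, in either direction. A minimal Python sketch follows, assuming the corridor applies only to plans below a size threshold. The function name, the `size_threshold` parameter and the example values are hypothetical; the article does not specify a threshold.

```python
def corridor_adjusted_risk(predicted, actual, plan_size, size_threshold, share=0.25):
    """Two-sided risk corridor: for plans below `size_threshold` members,
    credit the plan `share` of the gap between actual aggregate risk and
    the model's estimate. Larger plans keep the model's estimate as is."""
    if plan_size >= size_threshold:
        return predicted
    return predicted + share * (actual - predicted)


# Hypothetical example values, chosen only for illustration.
small_plan_above = corridor_adjusted_risk(1.00, 1.20, plan_size=300, size_threshold=500)
small_plan_below = corridor_adjusted_risk(1.00, 0.80, plan_size=300, size_threshold=500)
large_plan = corridor_adjusted_risk(1.00, 1.20, plan_size=5000, size_threshold=500)

print(small_plan_above, small_plan_below, large_plan)
```

Under this formulation, a small plan's remaining error is 75% of the model's original error, whether actual risk came in above or below the estimate.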

Further Exploration

The way we measure the accuracy of risk adjustment models informs policy and the direction of the development of such models. We can do better at aligning the way we measure model performance to how these models work in practice.

This article demonstrates that we need not chase complexity on the grounds of risk adjusted payment accuracy. There is an enormous potential for desirable outcomes if we can simplify risk adjustment methodologies. The specific option discussed here is self-reported health status with no reliance on health care claim data.

Toward the objectives of simplicity, mitigating gaming and higher payment accuracy, several areas are ripe for further research and development.

By conducting research into candidate localities for a pilot MA program, we can run a parallel calculation and test the effectiveness of a health status survey compared to the current health care claim data and diagnosis code–based approach. To address concerns about gaming survey answers, such a survey should be centrally administered and controlled. This will go a long way toward leveling the playing field. We need research into how technology can be used to accomplish this efficiently. Further research should focus on identifying the most predictive and least manipulable survey questions, as well as developing robust mechanisms for centralized, unbiased survey administration.

We already have marketing regulations designed to prevent discriminatory practices, including member selection based on health conditions.[7] Further research into appropriate regulations that would prevent gaining an unfair advantage for health status survey completions (e.g., disallowing coaching, incentives, etc.) would be helpful.

We also need research into additional resources that could be deployed by the central administering authority to mitigate uneven survey responses where necessary (e.g., multilingual support, access to technology, educational programs).

Members have concerns about privacy. In the MA program, detailed data are already centrally collected on members in an identifiable way. In the ACA program, detailed member-level data are collected centrally in the EDGE program but are somewhat deidentified. This article advocates for a group-level approach, so central data collection could be completely deidentified, lessening privacy concerns in this approach vs. today’s reality. We don’t care about linking health status survey responses to an individual or even a member identifier; we just care about aggregating those responses up to the health plan level.

Along with measuring accuracy, the way we build and calibrate a risk adjustment model depends greatly on whether we take a member-level or a group-level view. More research is needed to analyze group-level risks with real-world characteristics, and to select variables and calibrate models on that basis.

Of course, model accuracy is not the only consideration in evaluating the success of a risk adjustment program. Another is the resources required to execute a given methodology. A far simpler risk adjustment approach will be easier to administer. The efficiencies gained could unlock resources for researching other desirable features of risk adjustment policy; for example, using social determinants of health toward improving health care equities in vulnerable populations.

An important limitation of the data used in this analysis is that all the information is self-reported. Participants in the MEPS survey were asked about each hospitalization and outpatient or doctor’s visit. Professional coders then translated detailed survey responses from a medical visit into diagnosis codes and NDCs. The general health status questions used in my alternate approach were mostly yes/no questions from a different part of the survey and were representative of how this process might work in practice. As noted earlier, there is evidence here that self-reported data can explain a large amount of variation in health care spending, perhaps even greater than what we currently observe in a program like MA. Still, we need to do a pilot study to assess the applicability and generalizability of the results presented in this article.

Conclusion

The two main points in this article are:

- There are benefits to aligning how we measure health care risk adjustment accuracy with how these adjustments are actually administered.

- If this alignment is achieved, a far simpler approach becomes feasible—one that could not only improve payment accuracy but also eliminate the need for health care claim data.

The second point is important. Tens of billions of dollars move to or between health plans in each of the MA and ACA programs each year.[8] As long as health care claim data drive risk adjustment, incentives will favor measuring differential data quality rather than the actual health status of members. This undermines the purpose of risk adjustment.

A simplified risk adjustment methodology has the potential to address these issues while improving payment accuracy, presenting a transformative opportunity. The proposed approach offers a practical path forward in improving the efficiency and fairness of health care payments, while unlocking vast resources that can be put to far more productive use in health care rather than chasing codes.

To assess the feasibility and impact of this simplified methodology, a pilot program should be conducted alongside the existing approach. This would allow for real-world testing and refinement of the model, as well as provide insights into potential implementation challenges and solutions.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the editors, or the respective authors’ employers.

Syed Muzayan Mehmud is a principal with Wakely Consulting Group. Syed can be reached at https://www.linkedin.com/in/smehmud/.

Endnotes

[1] Centers for Medicare and Medicaid Services, “Summary Report on Permanent Risk Adjustment Transfers for the 2022 Benefit Year,” CMS, June 30, 2023, https://www.cms.gov/files/document/summary-report-permanent-risk-adjustment-transfers-2022-benefit-year.pdf.

[2] Committee for a Responsible Federal Budget, “Reducing Medicare Advantage Overpayments,” CRFB, February 2022, https://www.crfb.org/sites/default/files/managed/media-documents2022-02/HSI_MAOverpayments.pdf; Suzanne Murrin, “Some Medicare Advantage Companies Leveraged Chart Reviews and Health Risk Assessments to Disproportionately Drive Payments,” US Department of Health and Human Services, Office of Inspector General, September 13, 2021, https://oig.hhs.gov/reports/all/2021/some-medicare-advantage-companies-leveraged-chart-reviews-and-health-risk-assessments-to-disproportionately-drive-payments/; Richard Kronick and F. Michael Chua, “Industry-Wide and Sponsor-Specific Estimates of Medicare Advantage Coding Intensity,” SSRN, November 11, 2021, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3959446.

[3] Centers for Medicare and Medicaid Services, “Report to Congress: Risk Adjustment in Medicare Advantage,” CMS, December 2021, https://www.cms.gov/files/document/report-congress-risk-adjustment-medicare-advantage-december-2021.pdf.

[4] This includes rescaling to 1.0 at the plan level.

[5] Geoffrey R. Hileman, Syed Muzayan Mehmud and Marjorie A. Rosenberg, “Risk Scoring in Health Insurance: A Primer,” Society of Actuaries, July 2016, https://www.soa.org/4937c5/globalassets/assets/files/research/research-2016-risk-scoring-health-insurance.pdf.

[6] Centers for Medicare and Medicaid Services, “Report to Congress.”

[7] Claire Noel-Miller and Jane E. Sung, “New Medicare Advantage Marketing and Sales Rules Will Help Better Protect Consumers” [blog], AARP, September 19, 2023, https://blog.aarp.org/thinking-policy/new-medicare-advantage-marketing-and-sales-rules-will-help-better-protect-consumers.

[8] Centers for Medicare and Medicaid Services, “Summary Report.”